你没见过的分库分表原理解析和解决方案(二)

高并发三驾马车:分库分表、MQ、缓存。今天给大家带来的就是分库分表的干货解决方案,哪怕你不用我的框架也可以从中听到不一样的结局方案和实现。

一款支持自动分表分库的orm框架easy-query 帮助您解脱跨库带来的复杂业务代码,并且提供多种结局方案和自定义路由来实现比中间件更高性能的数据库访问。

-

GITHUB github地址 github.com/xuejmnet/ea…

-

GITEE gitee地址 gitee.com/xuejm/easy-…

上篇文章简单的带大家了解了分表分库的原理和聚合解析,但是还留了两个坑一个是分组如何实现一个是分页如何实现

介绍

分库分表的难题一直不是如何插入一直都是如何实现聚合查询,让用户无感知的使用才是分库分表的最终形态,所以数据坐落和数据聚合将是分库分表的重中之重,随着版本迭代easy-query正式发布了1.0.0版本相对的api基本已经稳定,分库分表和之前稍微有点不一样但是大部分都是一样的,那么这次我们将使用1.0.6来实现分库分表下的数据分组和分页。

数据准备

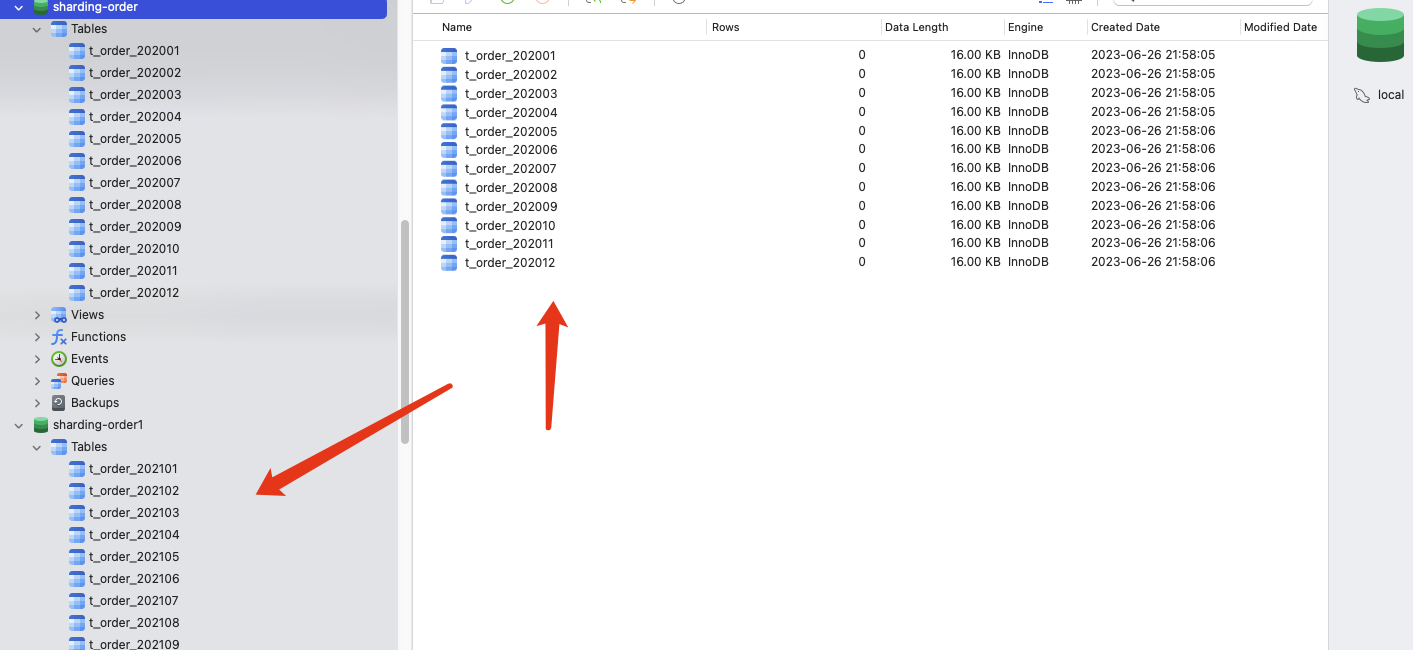

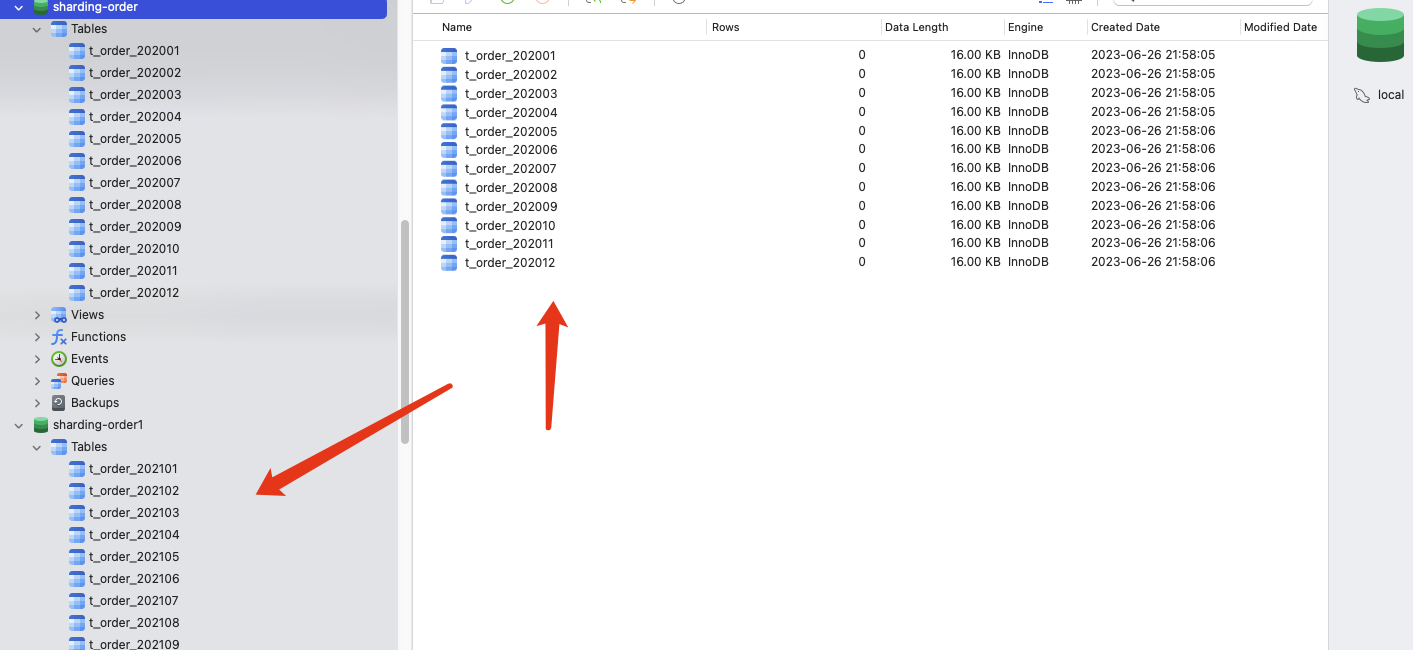

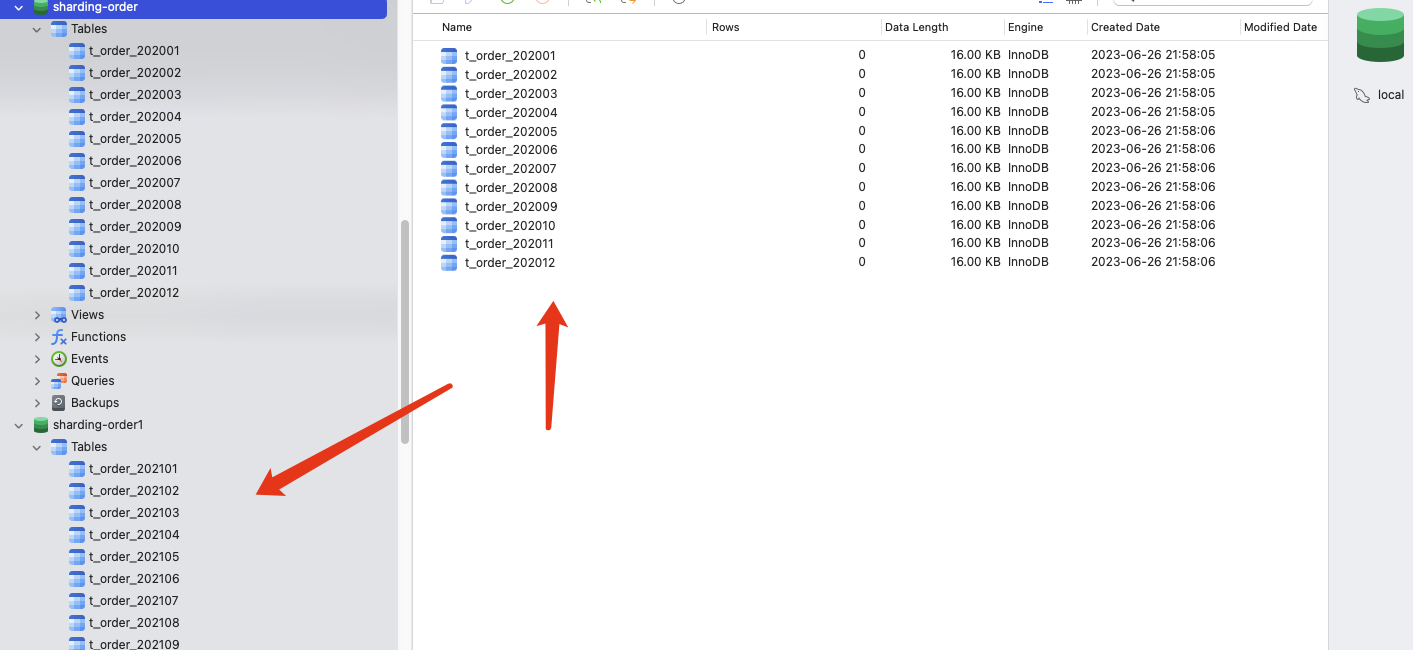

本次我们以订单为例,然后以订单创建时间进行按年分库,按月分表,最后来实现上述的分组和分页的功能

默认配置项

| 数据源名称 | 对应数据库 | 对应的订单年份 | 对应的订单表 |

|---|---|---|---|

| ds0 | sharding-order | 2020年 | t_order_202001,t_order_202002…..,t_order_202011,t_order_202012 |

| ds1 | sharding-order1 | 2021年 | t_order_202101,t_order_202102…..,t_order_202111,t_order_202112 |

| ds2 | sharding-order2 | 2022年 | t_order_202201,t_order_202202…..,t_order_202211,t_order_202212 |

| ds3 | sharding-order3 | 2023年 | t_order_202301,t_order_202302…..,t_order_202311,t_order_202312 |

添加依赖

<!--druid依赖--><dependency><groupId>com.alibaba</groupId><artifactId>druid-spring-boot-starter</artifactId><version>1.2.15</version></dependency><!-- mysql驱动 --><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId><version>8.0.28</version></dependency><dependency><groupId>org.projectlombok</groupId><artifactId>lombok</artifactId><version>1.18.18</version></dependency><dependency><groupId>com.easy-query</groupId><artifactId>sql-processor</artifactId><version>1.1.7</version><scope>compile</scope></dependency><dependency><groupId>com.easy-query</groupId><artifactId>sql-springboot-starter</artifactId><version>1.1.7</version><scope>compile</scope></dependency><!--druid依赖--> <dependency> <groupId>com.alibaba</groupId> <artifactId>druid-spring-boot-starter</artifactId> <version>1.2.15</version> </dependency> <!-- mysql驱动 --> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> <version>8.0.28</version> </dependency> <dependency> <groupId>org.projectlombok</groupId> <artifactId>lombok</artifactId> <version>1.18.18</version> </dependency> <dependency> <groupId>com.easy-query</groupId> <artifactId>sql-processor</artifactId> <version>1.1.7</version> <scope>compile</scope> </dependency> <dependency> <groupId>com.easy-query</groupId> <artifactId>sql-springboot-starter</artifactId> <version>1.1.7</version> <scope>compile</scope> </dependency><!--druid依赖--> <dependency> <groupId>com.alibaba</groupId> <artifactId>druid-spring-boot-starter</artifactId> <version>1.2.15</version> </dependency> <!-- mysql驱动 --> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> <version>8.0.28</version> </dependency> <dependency> <groupId>org.projectlombok</groupId> <artifactId>lombok</artifactId> <version>1.18.18</version> </dependency> <dependency> <groupId>com.easy-query</groupId> <artifactId>sql-processor</artifactId> <version>1.1.7</version> <scope>compile</scope> </dependency> <dependency> <groupId>com.easy-query</groupId> <artifactId>sql-springboot-starter</artifactId> <version>1.1.7</version> <scope>compile</scope> </dependency>

添加配置文件

server:port: 8081spring:profiles:active: devdatasource:type: com.alibaba.druid.pool.DruidDataSourcedriver-class-name: com.mysql.cj.jdbc.Driverurl: jdbc:mysql://127.0.0.1:3306/sharding-order?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false&allowMultiQueries=true&rewriteBatchedStatements=trueusername: rootpassword: rootdruid:initial-size: 10max-active: 100easy-query:enable: truename-conversion: underlineddatabase: mysqldefault-data-source-merge-pool-size: 60default-data-source-name: ds0server: port: 8081 spring: profiles: active: dev datasource: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://127.0.0.1:3306/sharding-order?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false&allowMultiQueries=true&rewriteBatchedStatements=true username: root password: root druid: initial-size: 10 max-active: 100 easy-query: enable: true name-conversion: underlined database: mysql default-data-source-merge-pool-size: 60 default-data-source-name: ds0server: port: 8081 spring: profiles: active: dev datasource: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://127.0.0.1:3306/sharding-order?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false&allowMultiQueries=true&rewriteBatchedStatements=true username: root password: root druid: initial-size: 10 max-active: 100 easy-query: enable: true name-conversion: underlined database: mysql default-data-source-merge-pool-size: 60 default-data-source-name: ds0

新建一个订单QOrderEntity按季度进行分表分库

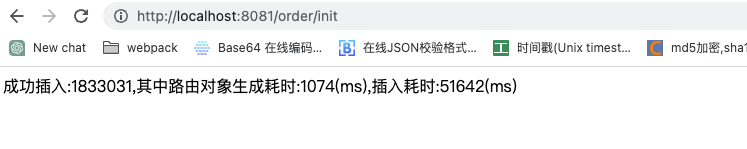

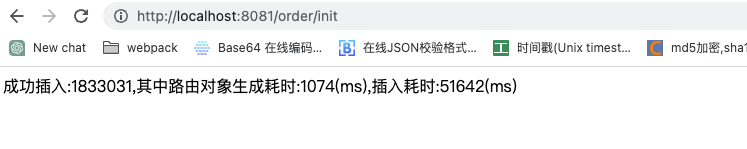

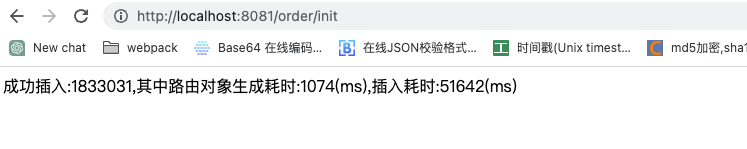

//分片表@Data@Table(value = "t_order", shardingInitializer = OrderInitializer.class)@EntityProxypublic class OrderEntity {@Column(primaryKey = true)private String id;private Integer orderNo;private String userId;@ShardingTableKey@ShardingDataSourceKeyprivate LocalDateTime createTime;}//分片初始化器@Componentpublic class OrderInitializer extends AbstractShardingMonthInitializer<OrderEntity> {/*** 分片起始时间* @return*/@Overrideprotected LocalDateTime getBeginTime() {return LocalDateTime.of(2020,1,1,0,0,0);}/*** 格式化时间到数据源* @param time* @param defaultDataSource* @return*/@Overrideprotected String formatDataSource(LocalDateTime time, String defaultDataSource) {String year = DateTimeFormatter.ofPattern("yyyy").format(time);int i = Integer.parseInt(year)-2020;return "ds"+i;}@Overridepublic void configure0(ShardingEntityBuilder<OrderEntity> builder) {}}//动态添加spring 启动后的动态数据源额外的ds1、ds2、ds3@Componentpublic class ShardingInitRunner implements ApplicationRunner {@Autowiredprivate EasyQuery easyQuery;@Overridepublic void run(ApplicationArguments args) throws Exception {Map<String, DataSource> dataSources = createDataSources();DataSourceManager dataSourceManager = easyQuery.getRuntimeContext().getDataSourceManager();for (Map.Entry<String, DataSource> stringDataSourceEntry : dataSources.entrySet()) {dataSourceManager.addDataSource(stringDataSourceEntry.getKey(), stringDataSourceEntry.getValue(), 60);}System.out.println("初始化完成");}private Map<String, DataSource> createDataSources() {HashMap<String, DataSource> stringDataSourceHashMap = new HashMap<>();for (int i = 1; i < 4; i++) {DataSource dataSource = createDataSource("ds" + i, "jdbc:mysql://127.0.0.1:3306/sharding-order" + i + "?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false&allowMultiQueries=true&rewriteBatchedStatements=true", "root", "root");stringDataSourceHashMap.put("ds" + i, dataSource);}return stringDataSourceHashMap;}private DataSource createDataSource(String dsName, String url, String username, String password) {// 设置propertiesProperties properties = new Properties();properties.setProperty("name", dsName);properties.setProperty("driverClassName", "com.mysql.cj.jdbc.Driver");properties.setProperty("url", url);properties.setProperty("username", username);properties.setProperty("password", password);properties.setProperty("initialSize", "10");properties.setProperty("maxActive", "100");try {return DruidDataSourceFactory.createDataSource(properties);} catch (Exception e) {throw new EasyQueryException(e);}}}//新建分库路由@Componentpublic class OrderDataSourceRoute extends AbstractDataSourceRoute<OrderEntity> {protected Integer formatShardingValue(LocalDateTime time) {String year = time.format(DateTimeFormatter.ofPattern("yyyy"));return Integer.parseInt(year);}public boolean lessThanTimeStart(LocalDateTime shardingValue) {LocalDateTime timeYearFirstDay = EasyUtil.getYearStart(shardingValue);return shardingValue.isEqual(timeYearFirstDay);}protected Comparator<String> getDataSourceComparator(){return IgnoreCaseStringComparator.DEFAULT;}@Overrideprotected RouteFunction<String> getRouteFilter(TableAvailable table, Object shardingValue, ShardingOperatorEnum shardingOperator, boolean withEntity) {//将分片键转成对应的类型LocalDateTime shardingTime = (LocalDateTime)shardingValue ;Integer intYear = formatShardingValue(shardingTime);String dataSourceName="ds"+String.valueOf((intYear-2020));//ds0 ds1 ds2 ds3....switch (shardingOperator) {case GREATER_THAN:case GREATER_THAN_OR_EQUAL:return ds -> getDataSourceComparator().compare(dataSourceName, ds) <= 0;case LESS_THAN: {//如果小于月初那么月初的表是不需要被查询的 如果小于年初也不需要查询if (lessThanTimeStart(shardingTime)) {return ds -> getDataSourceComparator().compare(dataSourceName, ds) > 0;}return ds -> getDataSourceComparator().compare(dataSourceName, ds) >= 0;}case LESS_THAN_OR_EQUAL:return ds -> getDataSourceComparator().compare(dataSourceName, ds) >= 0;case EQUAL:return ds -> getDataSourceComparator().compare(dataSourceName,ds) == 0;default:return ds -> true;}}}//新建分表路由//分表路由由系统提供默认按月分片@Componentpublic class OrderTableRoute extends AbstractMonthTableRoute<OrderEntity> {@Overrideprotected LocalDateTime convertLocalDateTime(Object shardingValue) {return (LocalDateTime)shardingValue;}}```通过sql脚本我们创建好对应的数据库表结构初始化项目代码``javaprivate final EasyProxyQuery easyProxyQuery;@GetMapping("/init")public Object init() {long start = System.currentTimeMillis();LocalDateTime beginTime = LocalDateTime.of(2020, 1, 1, 0, 0, 0);LocalDateTime now = LocalDateTime.now();ArrayList<OrderEntity> orderEntities = new ArrayList<>();List<String> userIds = Arrays.asList("小明", "小红", "小蓝", "小黄", "小绿");int i=0;do {OrderEntity orderEntity = new OrderEntity();String timeFormat = DateTimeFormatter.ofPattern("yyyyMMddHHmmss").format(beginTime);orderEntity.setId(timeFormat);orderEntity.setOrderNo(i);orderEntity.setUserId(userIds.get(i%5));orderEntity.setCreateTime(beginTime);orderEntities.add(orderEntity);i++;beginTime=beginTime.plusMinutes(1);} while (beginTime.isBefore(now));long end = System.currentTimeMillis();long insertStart = System.currentTimeMillis();long rows = easyProxyQuery.insertable(orderEntities).executeRows();long insertEnd = System.currentTimeMillis();return "成功插入:" + rows+",其中路由对象生成耗时:"+(end-start)+"(ms),插入耗时:"+(insertEnd-insertStart)+"(ms)";}```数据初始化成功,接下来演示如何进行分组# 分组聚合分表分库下我们应该如何分组聚合代码很简单就是查询userId in ["小明", "小绿"]的然后对userId分组求对应的订单号求和````java@GetMapping("/groupByWithSumOrderNo")public Object groupByWithSumOrderNo() {long start = System.currentTimeMillis();List<String> userIds = Arrays.asList("小明", "小绿");List<OrderGroupWithSumOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT).where((filter, t) -> filter.in(t.userId(), userIds)).groupBy((group, t) -> group.column(t.userId())).select(OrderGroupWithSumOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnSumAs(t.orderNo(), r -> r.orderNoSum())).toList();long end = System.currentTimeMillis();return Arrays.asList(list,(end-start)+"(ms)");}//分片表 @Data @Table(value = "t_order", shardingInitializer = OrderInitializer.class) @EntityProxy public class OrderEntity { @Column(primaryKey = true) private String id; private Integer orderNo; private String userId; @ShardingTableKey @ShardingDataSourceKey private LocalDateTime createTime; } //分片初始化器 @Component public class OrderInitializer extends AbstractShardingMonthInitializer<OrderEntity> { /** * 分片起始时间 * @return */ @Override protected LocalDateTime getBeginTime() { return LocalDateTime.of(2020,1,1,0,0,0); } /** * 格式化时间到数据源 * @param time * @param defaultDataSource * @return */ @Override protected String formatDataSource(LocalDateTime time, String defaultDataSource) { String year = DateTimeFormatter.ofPattern("yyyy").format(time); int i = Integer.parseInt(year)-2020; return "ds"+i; } @Override public void configure0(ShardingEntityBuilder<OrderEntity> builder) { } } //动态添加spring 启动后的动态数据源额外的ds1、ds2、ds3 @Component public class ShardingInitRunner implements ApplicationRunner { @Autowired private EasyQuery easyQuery; @Override public void run(ApplicationArguments args) throws Exception { Map<String, DataSource> dataSources = createDataSources(); DataSourceManager dataSourceManager = easyQuery.getRuntimeContext().getDataSourceManager(); for (Map.Entry<String, DataSource> stringDataSourceEntry : dataSources.entrySet()) { dataSourceManager.addDataSource(stringDataSourceEntry.getKey(), stringDataSourceEntry.getValue(), 60); } System.out.println("初始化完成"); } private Map<String, DataSource> createDataSources() { HashMap<String, DataSource> stringDataSourceHashMap = new HashMap<>(); for (int i = 1; i < 4; i++) { DataSource dataSource = createDataSource("ds" + i, "jdbc:mysql://127.0.0.1:3306/sharding-order" + i + "?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false&allowMultiQueries=true&rewriteBatchedStatements=true", "root", "root"); stringDataSourceHashMap.put("ds" + i, dataSource); } return stringDataSourceHashMap; } private DataSource createDataSource(String dsName, String url, String username, String password) { // 设置properties Properties properties = new Properties(); properties.setProperty("name", dsName); properties.setProperty("driverClassName", "com.mysql.cj.jdbc.Driver"); properties.setProperty("url", url); properties.setProperty("username", username); properties.setProperty("password", password); properties.setProperty("initialSize", "10"); properties.setProperty("maxActive", "100"); try { return DruidDataSourceFactory.createDataSource(properties); } catch (Exception e) { throw new EasyQueryException(e); } } } //新建分库路由 @Component public class OrderDataSourceRoute extends AbstractDataSourceRoute<OrderEntity> { protected Integer formatShardingValue(LocalDateTime time) { String year = time.format(DateTimeFormatter.ofPattern("yyyy")); return Integer.parseInt(year); } public boolean lessThanTimeStart(LocalDateTime shardingValue) { LocalDateTime timeYearFirstDay = EasyUtil.getYearStart(shardingValue); return shardingValue.isEqual(timeYearFirstDay); } protected Comparator<String> getDataSourceComparator(){ return IgnoreCaseStringComparator.DEFAULT; } @Override protected RouteFunction<String> getRouteFilter(TableAvailable table, Object shardingValue, ShardingOperatorEnum shardingOperator, boolean withEntity) { //将分片键转成对应的类型 LocalDateTime shardingTime = (LocalDateTime)shardingValue ; Integer intYear = formatShardingValue(shardingTime); String dataSourceName="ds"+String.valueOf((intYear-2020));//ds0 ds1 ds2 ds3.... switch (shardingOperator) { case GREATER_THAN: case GREATER_THAN_OR_EQUAL: return ds -> getDataSourceComparator().compare(dataSourceName, ds) <= 0; case LESS_THAN: { //如果小于月初那么月初的表是不需要被查询的 如果小于年初也不需要查询 if (lessThanTimeStart(shardingTime)) { return ds -> getDataSourceComparator().compare(dataSourceName, ds) > 0; } return ds -> getDataSourceComparator().compare(dataSourceName, ds) >= 0; } case LESS_THAN_OR_EQUAL: return ds -> getDataSourceComparator().compare(dataSourceName, ds) >= 0; case EQUAL: return ds -> getDataSourceComparator().compare(dataSourceName,ds) == 0; default: return ds -> true; } } } //新建分表路由 //分表路由由系统提供默认按月分片 @Component public class OrderTableRoute extends AbstractMonthTableRoute<OrderEntity> { @Override protected LocalDateTime convertLocalDateTime(Object shardingValue) { return (LocalDateTime)shardingValue; } } ``` 通过sql脚本我们创建好对应的数据库表结构  初始化项目代码 ``java private final EasyProxyQuery easyProxyQuery; @GetMapping("/init") public Object init() { long start = System.currentTimeMillis(); LocalDateTime beginTime = LocalDateTime.of(2020, 1, 1, 0, 0, 0); LocalDateTime now = LocalDateTime.now(); ArrayList<OrderEntity> orderEntities = new ArrayList<>(); List<String> userIds = Arrays.asList("小明", "小红", "小蓝", "小黄", "小绿"); int i=0; do { OrderEntity orderEntity = new OrderEntity(); String timeFormat = DateTimeFormatter.ofPattern("yyyyMMddHHmmss").format(beginTime); orderEntity.setId(timeFormat); orderEntity.setOrderNo(i); orderEntity.setUserId(userIds.get(i%5)); orderEntity.setCreateTime(beginTime); orderEntities.add(orderEntity); i++; beginTime=beginTime.plusMinutes(1); } while (beginTime.isBefore(now)); long end = System.currentTimeMillis(); long insertStart = System.currentTimeMillis(); long rows = easyProxyQuery.insertable(orderEntities).executeRows(); long insertEnd = System.currentTimeMillis(); return "成功插入:" + rows+",其中路由对象生成耗时:"+(end-start)+"(ms),插入耗时:"+(insertEnd-insertStart)+"(ms)"; } ```  数据初始化成功,接下来演示如何进行分组 # 分组聚合 分表分库下我们应该如何分组聚合 代码很简单就是查询userId in ["小明", "小绿"]的然后对userId分组求对应的订单号求和 ````java @GetMapping("/groupByWithSumOrderNo") public Object groupByWithSumOrderNo() { long start = System.currentTimeMillis(); List<String> userIds = Arrays.asList("小明", "小绿"); List<OrderGroupWithSumOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT) .where((filter, t) -> filter.in(t.userId(), userIds)) .groupBy((group, t) -> group.column(t.userId())) .select(OrderGroupWithSumOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnSumAs(t.orderNo(), r -> r.orderNoSum())) .toList(); long end = System.currentTimeMillis(); return Arrays.asList(list,(end-start)+"(ms)"); }//分片表 @Data @Table(value = "t_order", shardingInitializer = OrderInitializer.class) @EntityProxy public class OrderEntity { @Column(primaryKey = true) private String id; private Integer orderNo; private String userId; @ShardingTableKey @ShardingDataSourceKey private LocalDateTime createTime; } //分片初始化器 @Component public class OrderInitializer extends AbstractShardingMonthInitializer<OrderEntity> { /** * 分片起始时间 * @return */ @Override protected LocalDateTime getBeginTime() { return LocalDateTime.of(2020,1,1,0,0,0); } /** * 格式化时间到数据源 * @param time * @param defaultDataSource * @return */ @Override protected String formatDataSource(LocalDateTime time, String defaultDataSource) { String year = DateTimeFormatter.ofPattern("yyyy").format(time); int i = Integer.parseInt(year)-2020; return "ds"+i; } @Override public void configure0(ShardingEntityBuilder<OrderEntity> builder) { } } //动态添加spring 启动后的动态数据源额外的ds1、ds2、ds3 @Component public class ShardingInitRunner implements ApplicationRunner { @Autowired private EasyQuery easyQuery; @Override public void run(ApplicationArguments args) throws Exception { Map<String, DataSource> dataSources = createDataSources(); DataSourceManager dataSourceManager = easyQuery.getRuntimeContext().getDataSourceManager(); for (Map.Entry<String, DataSource> stringDataSourceEntry : dataSources.entrySet()) { dataSourceManager.addDataSource(stringDataSourceEntry.getKey(), stringDataSourceEntry.getValue(), 60); } System.out.println("初始化完成"); } private Map<String, DataSource> createDataSources() { HashMap<String, DataSource> stringDataSourceHashMap = new HashMap<>(); for (int i = 1; i < 4; i++) { DataSource dataSource = createDataSource("ds" + i, "jdbc:mysql://127.0.0.1:3306/sharding-order" + i + "?serverTimezone=GMT%2B8&characterEncoding=utf-8&useSSL=false&allowMultiQueries=true&rewriteBatchedStatements=true", "root", "root"); stringDataSourceHashMap.put("ds" + i, dataSource); } return stringDataSourceHashMap; } private DataSource createDataSource(String dsName, String url, String username, String password) { // 设置properties Properties properties = new Properties(); properties.setProperty("name", dsName); properties.setProperty("driverClassName", "com.mysql.cj.jdbc.Driver"); properties.setProperty("url", url); properties.setProperty("username", username); properties.setProperty("password", password); properties.setProperty("initialSize", "10"); properties.setProperty("maxActive", "100"); try { return DruidDataSourceFactory.createDataSource(properties); } catch (Exception e) { throw new EasyQueryException(e); } } } //新建分库路由 @Component public class OrderDataSourceRoute extends AbstractDataSourceRoute<OrderEntity> { protected Integer formatShardingValue(LocalDateTime time) { String year = time.format(DateTimeFormatter.ofPattern("yyyy")); return Integer.parseInt(year); } public boolean lessThanTimeStart(LocalDateTime shardingValue) { LocalDateTime timeYearFirstDay = EasyUtil.getYearStart(shardingValue); return shardingValue.isEqual(timeYearFirstDay); } protected Comparator<String> getDataSourceComparator(){ return IgnoreCaseStringComparator.DEFAULT; } @Override protected RouteFunction<String> getRouteFilter(TableAvailable table, Object shardingValue, ShardingOperatorEnum shardingOperator, boolean withEntity) { //将分片键转成对应的类型 LocalDateTime shardingTime = (LocalDateTime)shardingValue ; Integer intYear = formatShardingValue(shardingTime); String dataSourceName="ds"+String.valueOf((intYear-2020));//ds0 ds1 ds2 ds3.... switch (shardingOperator) { case GREATER_THAN: case GREATER_THAN_OR_EQUAL: return ds -> getDataSourceComparator().compare(dataSourceName, ds) <= 0; case LESS_THAN: { //如果小于月初那么月初的表是不需要被查询的 如果小于年初也不需要查询 if (lessThanTimeStart(shardingTime)) { return ds -> getDataSourceComparator().compare(dataSourceName, ds) > 0; } return ds -> getDataSourceComparator().compare(dataSourceName, ds) >= 0; } case LESS_THAN_OR_EQUAL: return ds -> getDataSourceComparator().compare(dataSourceName, ds) >= 0; case EQUAL: return ds -> getDataSourceComparator().compare(dataSourceName,ds) == 0; default: return ds -> true; } } } //新建分表路由 //分表路由由系统提供默认按月分片 @Component public class OrderTableRoute extends AbstractMonthTableRoute<OrderEntity> { @Override protected LocalDateTime convertLocalDateTime(Object shardingValue) { return (LocalDateTime)shardingValue; } } ``` 通过sql脚本我们创建好对应的数据库表结构  初始化项目代码 ``java private final EasyProxyQuery easyProxyQuery; @GetMapping("/init") public Object init() { long start = System.currentTimeMillis(); LocalDateTime beginTime = LocalDateTime.of(2020, 1, 1, 0, 0, 0); LocalDateTime now = LocalDateTime.now(); ArrayList<OrderEntity> orderEntities = new ArrayList<>(); List<String> userIds = Arrays.asList("小明", "小红", "小蓝", "小黄", "小绿"); int i=0; do { OrderEntity orderEntity = new OrderEntity(); String timeFormat = DateTimeFormatter.ofPattern("yyyyMMddHHmmss").format(beginTime); orderEntity.setId(timeFormat); orderEntity.setOrderNo(i); orderEntity.setUserId(userIds.get(i%5)); orderEntity.setCreateTime(beginTime); orderEntities.add(orderEntity); i++; beginTime=beginTime.plusMinutes(1); } while (beginTime.isBefore(now)); long end = System.currentTimeMillis(); long insertStart = System.currentTimeMillis(); long rows = easyProxyQuery.insertable(orderEntities).executeRows(); long insertEnd = System.currentTimeMillis(); return "成功插入:" + rows+",其中路由对象生成耗时:"+(end-start)+"(ms),插入耗时:"+(insertEnd-insertStart)+"(ms)"; } ```  数据初始化成功,接下来演示如何进行分组 # 分组聚合 分表分库下我们应该如何分组聚合 代码很简单就是查询userId in ["小明", "小绿"]的然后对userId分组求对应的订单号求和 ````java @GetMapping("/groupByWithSumOrderNo") public Object groupByWithSumOrderNo() { long start = System.currentTimeMillis(); List<String> userIds = Arrays.asList("小明", "小绿"); List<OrderGroupWithSumOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT) .where((filter, t) -> filter.in(t.userId(), userIds)) .groupBy((group, t) -> group.column(t.userId())) .select(OrderGroupWithSumOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnSumAs(t.orderNo(), r -> r.orderNoSum())) .toList(); long end = System.currentTimeMillis(); return Arrays.asList(list,(end-start)+"(ms)"); }

[[{"orderNoSum":993365517,"userId":"小明"},{"orderNoSum":992998911,"userId":"小绿"}],"1768(ms)"]共计耗时约1.8秒

@GetMapping("/groupByWithSumOrderNoOrderByUserId")public Object groupByWithSumOrderNoOrderByUserId() {long start = System.currentTimeMillis();List<String> userIds = Arrays.asList("小明", "小绿");List<OrderGroupWithSumOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT).where((filter, t) -> filter.in(t.userId(), userIds)).groupBy((group, t) -> group.column(t.userId())).orderByAsc((order,t)->order.column(t.userId())).select(OrderGroupWithSumOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnSumAs(t.orderNo(), r -> r.orderNoSum())).toList();long end = System.currentTimeMillis();return Arrays.asList(list,(end-start)+"(ms)");}@GetMapping("/groupByWithSumOrderNoOrderByUserId") public Object groupByWithSumOrderNoOrderByUserId() { long start = System.currentTimeMillis(); List<String> userIds = Arrays.asList("小明", "小绿"); List<OrderGroupWithSumOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT) .where((filter, t) -> filter.in(t.userId(), userIds)) .groupBy((group, t) -> group.column(t.userId())) .orderByAsc((order,t)->order.column(t.userId())) .select(OrderGroupWithSumOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnSumAs(t.orderNo(), r -> r.orderNoSum())) .toList(); long end = System.currentTimeMillis(); return Arrays.asList(list,(end-start)+"(ms)"); }@GetMapping("/groupByWithSumOrderNoOrderByUserId") public Object groupByWithSumOrderNoOrderByUserId() { long start = System.currentTimeMillis(); List<String> userIds = Arrays.asList("小明", "小绿"); List<OrderGroupWithSumOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT) .where((filter, t) -> filter.in(t.userId(), userIds)) .groupBy((group, t) -> group.column(t.userId())) .orderByAsc((order,t)->order.column(t.userId())) .select(OrderGroupWithSumOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnSumAs(t.orderNo(), r -> r.orderNoSum())) .toList(); long end = System.currentTimeMillis(); return Arrays.asList(list,(end-start)+"(ms)"); }

[[{"orderNoSum":993365517,"userId":"小明"},{"orderNoSum":992998911,"userId":"小绿"}],"1699(ms)"]

我们非常快速的获取了查询结果,那么这个结果是如何获取的呢接下来我将讲解分组聚合的原理并且会讲解order by对group在分片中的影响是有多大的影响

分组求和

原理解析这边以两个分片来进行聚合

将sql进行路由解析后分别对两个分片节点进行查询聚合,然后合并到内存中分别对group和sum进行处理实现无感知分片聚合group,但是大家可能已经发现了这个是sum那么各个节点的数据可以相加如果是avg呢应该怎么办,接下来我来讲解group下如何进行avg的数据聚合查询。

分组取平均数

首先我们来假设一个表a和表b两个表里面数据如下

![图片[1]-你没见过的分库分表原理解析和解决方案(二)-五八三](https://583.cn/wp-content/uploads/2023/06/602b5d5112a83e1b966189858ea613f5.jpg) 结果错误!!!

结果错误!!!

可以看到单纯的通过内存来进行平均值的聚合是不正确的,因为只有当各个分片内的数据和分片数一样才可以简单的avg,那么我们应该如何实现分组求平均呢。

1.avg本质等于什么?

我们都知道avg=sum/count通过这个公式avg是不可以简单分片聚合的那么如果我们知道sum和count呢,是不是就可以知道avg了,在退一万步我们已经知道avg的情况下是不是只需要知道sum或者count也可以算出对应的第三个值

2.如何实现

- 如果用户存在group+avg那么强制要求进行对应avg字段也进行sum或者count的其中一个

- 如果发现用户存在group+avg那么就自动重写添加sum或者count的查询

那么之前的group+avg就会变成group+avg+sum

通过上述描述我们应该可以清晰的看到应该如何针对各个节点的分组求平均值来处理正确的方法

内存分组聚合

上述所有例子我们都是通过内存分组聚合来实现各个节点的分组聚合,缺点就是需要先把各个节点的数据存储到内存中然后再次进行分组,那么如果各个节点的数据过多那么在分组聚合的第一阶段可能会导致内存的大量消耗,所以接下来我将给大家讲解流式分组聚合

流式分组聚合

上个文章我们讲解过流式聚合,那么流式分组聚合和流式聚合的差别在哪呢,很明显就是分组这个关键字上,我们如何保证下一个next所需的数据就是我上一个需要group的呢

答案就是order by

只要各个节点的order by后的排序字段和编程语言在内存中的一样即可保证

easy-query是如何实现的

List<String> userIds = Arrays.asList("小明", "小绿");List<OrderGroupWithAvgOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT).where((filter, t) -> filter.in(t.userId(), userIds)).groupBy((group, t) -> group.column(t.userId())).orderByAsc((order, t) -> order.column(t.userId())).select(OrderGroupWithAvgOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnAvgAs(t.orderNo(), r -> r.orderNoAvg())).toList();//生成的sql 会自动补齐count和sum来保证数据结果的正确性SELECT t.`user_id` AS `user_id`,AVG(t.`order_no`) AS `order_no_avg`,COUNT(t.`order_no`) AS `orderNoRewriteCount`,SUM(t.`order_no`) AS `orderNoRewriteSum`FROM `t_order_202211` tWHERE t.`user_id` IN (?,?)GROUP BY t.`user_id`ORDER BY t.`user_id` ASC[[{"orderNoAvg":916515.0000,"userId":"小明"},{"orderNoAvg":916516.5000,"userId":"小绿"}],"1924(ms)"]分别对其进行求和和求count[[{"orderNoSum":336000814605,"userId":"小明"}],"914(ms)"][[{"orderNoAvg":366607,"userId":"小明"}],"777(ms)"]916515.0000=336000814605/366607 所以结果是正确的List<String> userIds = Arrays.asList("小明", "小绿"); List<OrderGroupWithAvgOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT) .where((filter, t) -> filter.in(t.userId(), userIds)) .groupBy((group, t) -> group.column(t.userId())) .orderByAsc((order, t) -> order.column(t.userId())) .select(OrderGroupWithAvgOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnAvgAs(t.orderNo(), r -> r.orderNoAvg())) .toList(); //生成的sql 会自动补齐count和sum来保证数据结果的正确性 SELECT t.`user_id` AS `user_id`,AVG(t.`order_no`) AS `order_no_avg`,COUNT(t.`order_no`) AS `orderNoRewriteCount`,SUM(t.`order_no`) AS `orderNoRewriteSum` FROM `t_order_202211` t WHERE t.`user_id` IN (?,?) GROUP BY t.`user_id` ORDER BY t.`user_id` ASC [[{"orderNoAvg":916515.0000,"userId":"小明"},{"orderNoAvg":916516.5000,"userId":"小绿"}],"1924(ms)"] 分别对其进行求和和求count [[{"orderNoSum":336000814605,"userId":"小明"}],"914(ms)"] [[{"orderNoAvg":366607,"userId":"小明"}],"777(ms)"] 916515.0000=336000814605/366607 所以结果是正确的List<String> userIds = Arrays.asList("小明", "小绿"); List<OrderGroupWithAvgOrderNoVO> list = easyProxyQuery.queryable(OrderEntityProxy.DEFAULT) .where((filter, t) -> filter.in(t.userId(), userIds)) .groupBy((group, t) -> group.column(t.userId())) .orderByAsc((order, t) -> order.column(t.userId())) .select(OrderGroupWithAvgOrderNoVOProxy.DEFAULT, (selector, t) -> selector.columnAs(t.userId(), r -> r.userId()).columnAvgAs(t.orderNo(), r -> r.orderNoAvg())) .toList(); //生成的sql 会自动补齐count和sum来保证数据结果的正确性 SELECT t.`user_id` AS `user_id`,AVG(t.`order_no`) AS `order_no_avg`,COUNT(t.`order_no`) AS `orderNoRewriteCount`,SUM(t.`order_no`) AS `orderNoRewriteSum` FROM `t_order_202211` t WHERE t.`user_id` IN (?,?) GROUP BY t.`user_id` ORDER BY t.`user_id` ASC [[{"orderNoAvg":916515.0000,"userId":"小明"},{"orderNoAvg":916516.5000,"userId":"小绿"}],"1924(ms)"] 分别对其进行求和和求count [[{"orderNoSum":336000814605,"userId":"小明"}],"914(ms)"] [[{"orderNoAvg":366607,"userId":"小明"}],"777(ms)"] 916515.0000=336000814605/366607 所以结果是正确的

分页聚合

如果您在项目中使用过分库分表,那么一定知道分库分表的难点在哪里,那么就是聚合数据范围和跨表数据返回,如果跨表数据返回再有一个难点那么就是分片数据跨分片分页,并且支持条件排序等处理操作。

内存分页

内存分页作为最简单的分页方法,在前几页的处理中有着非常方便的和高效的实用,具体原理如下

--原始sqlselect * from order where time between 2020 and 2021 order by time limit 1,5假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写select * from order_2020 where time between 2020 and 2021 order by time limit 1,5select * from order_2021 where time between 2020 and 2021 order by time limit 1,5--原始sql select * from order where time between 2020 and 2021 order by time limit 1,5 假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写 select * from order_2020 where time between 2020 and 2021 order by time limit 1,5 select * from order_2021 where time between 2020 and 2021 order by time limit 1,5--原始sql select * from order where time between 2020 and 2021 order by time limit 1,5 假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写 select * from order_2020 where time between 2020 and 2021 order by time limit 1,5 select * from order_2021 where time between 2020 and 2021 order by time limit 1,5

分别对两张表进行前10条数据的获取然后再内存中就有20条数据,针对这20条数据进行order by time的相同操作,然后获取前10条

那么如果是获取第二页呢

--原始sqlselect * from order where time between 2020 and 2021 order by time limit 2,5假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写--错误的做法select * from order_2020 where time between 2020 and 2021 order by time limit 2,5select * from order_2021 where time between 2020 and 2021 order by time limit 2,5--原始sql select * from order where time between 2020 and 2021 order by time limit 2,5 假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写 --错误的做法 select * from order_2020 where time between 2020 and 2021 order by time limit 2,5 select * from order_2021 where time between 2020 and 2021 order by time limit 2,5--原始sql select * from order where time between 2020 and 2021 order by time limit 2,5 假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写 --错误的做法 select * from order_2020 where time between 2020 and 2021 order by time limit 2,5 select * from order_2021 where time between 2020 and 2021 order by time limit 2,5

通过上图我们清晰地可以知道这个解析是错误那么正确的应该是怎么样的呢

--原始sqlselect * from order where time between 2020 and 2021 order by time limit 2,5假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写--正确的做法select * from order_2020 where time between 2020 and 2021 order by time limit 1,10select * from order_2021 where time between 2020 and 2021 order by time limit 1,10--原始sql select * from order where time between 2020 and 2021 order by time limit 2,5 假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写 --正确的做法 select * from order_2020 where time between 2020 and 2021 order by time limit 1,10 select * from order_2021 where time between 2020 and 2021 order by time limit 1,10--原始sql select * from order where time between 2020 and 2021 order by time limit 2,5 假如他被路由到2020和2021两张表那么要获取前10条数据应该怎么写 --正确的做法 select * from order_2020 where time between 2020 and 2021 order by time limit 1,10 select * from order_2021 where time between 2020 and 2021 order by time limit 1,10

考虑到最坏的情况就是6-10全部在左侧或者全部在右侧,因为数据的分布无法知晓所以我们应该以最坏的情况来获取数据然后获取6-10条数据

好了这样我们就实现了如何用内存来实现

int pageIndex=xint pageSize=y;那么重写后的sql应该是int pageIndex=1int pageSize=x*y;int pageIndex=x int pageSize=y; 那么重写后的sql应该是 int pageIndex=1 int pageSize=x*y;int pageIndex=x int pageSize=y; 那么重写后的sql应该是 int pageIndex=1 int pageSize=x*y;

虽然我们发现了如何正确的获取分页数据但是也存在一个非常严重的问题,就是深度分页导致的内存爆炸,因为每个分片的获取对象都是xy那么如果有n个分片被本次查询覆盖那么就需要获取至多xy*n条数据到内存,其中pageIndex就是x是用户自行选择的所以会存在x的大小不确定这样就会导致内存的严重消耗甚至oom。

流式分页

既然我们已经知道了内存分页的缺点那么是否有办法针对上述缺点进行规避或者优化呢,答案是有的就是流式分页,所谓流式分页就是利用ResultSet的延迟获取特点,配合之前的流式获取来适当性的放弃头部数据来达到节省内存的效果.

流式分页是如何优化程序的

int pageIndex=xint pageSize=y;那么重写后的sql应该是int pageIndex=1int pageSize=x*y;int pageIndex=x int pageSize=y; 那么重写后的sql应该是 int pageIndex=1 int pageSize=x*y;int pageIndex=x int pageSize=y; 那么重写后的sql应该是 int pageIndex=1 int pageSize=x*y;

因为jdbc的resultset延迟获取的特性,所以每次调用next才会将数据取到客户端,利用这个特性可以将前5条数据获取到并且放弃来实现内存严格控制,并且满足获取条数后后面的11-20是不需要获取的,有效的避免网络I/O的浪费和大大提高性能。

虽然流式分页可以大大的提高内存利用率,并且可以用最少的I/O次数来获取正确的分页数量由原先的xyn变成x*y,但是我们会发现在深度分页的情况下网络io还是需要实打实的获取到客户端进行判断,所以在深分页下不仅数据库压力大,客户端网络I/O压力也大,并且在页数很大的情况下默认起始页和结束页是默认显示在分页组件上的那么就就会导致用户很容易点到页尾导致程序进入卡死状态并且响应变慢从而拖慢应用

反排分页

跨分片深度分页解决方案

-

1.让用户妥协只支持瀑布流分页,就是app滚动相似的分页,不支持跳页只支持next页放弃count仅limit获取,并且无法自定义排序,业务上直接禁止页尾跳页直接避免问题

-

2.反向排序分页依然是count+limit的组合

我们都知道跨分片的聚合是因为深度分页慢是因为网络I/O的大量读取,所以如果我们可以保证网络I/O的读取次数变少那么是否就能解决这个问题

何谓反向分页首先我们来看一张图

原来我们需要跳过大量的网络I/O才能获取的正确数据如果我们有反向分页那么只需要跳过少量的数据就可以实现深度分页,并且因为大部分业务场景都支持跳页所以count的查询是一定会有的,我们只需要对各个分片的count进行第一次查询的获取那么就可以保证在深度分页下的反向排序分页

通过对order by的反向置换并且将offset重新计算来四线深度跨分片分页下I/O的极大减少保证正序的健壮性

顺序分页

到目前为止我们的页首和页尾节点的分页已经解决了,那么针对分页的中间部分改怎么办呢,是否还有优化方案呢,答案是有的但是这个优化方案对于分片方式有特殊的要求并没有前两种的通用化,顺序分片。

什么叫做顺序分页,顺序分页就是例如按时间按月分表,按年分表,按天分表,每张的内部数据永远是有一个特殊的排序字段可以让其依次从小到大排列。如果分片是这种特性的分片那么可以保证在order by这个特殊字段的时候几乎可以做到除了页首和页尾甚至中间任意节点的高性能

![图片[2]-你没见过的分库分表原理解析和解决方案(二)-五八三](https://583.cn/wp-content/uploads/2023/06/d4e0d4effb7c648ce229812caa8d8bae.jpg)

到目前为止如果您是顺序分页并且排序字段是顺序字段那么可以保证跨分片的查询和普通查询基本没有两样,但是我们其实还是发现了一个问题就是每次查询都需要count一下这个其实是很费时间的,并且基本上如果是大数量的情况下基本大致数据不需要更新或者只需要更新最新的一页即可

指定分页

基于上述问题easy-query实现了指定分页,就是可以通过第一次的分片记录下当前条件的各个节点的count数据,那么接下来的查询如果条件没有变化就不需要再进行count了,并且针对最新节点依然可以选择单独查询count从而来保证数据的准确性。

最后

通过上述几个讲述您应该已经对分表分库有了一个全新的理解和优化,接下来的几个篇章我将带你通过简单的实现和高级的抽象来完成easy-query的全新orm的变成之旅让分表分库变得非常简单且非常高效,并且会提出多种解决方案来实现老旧数据的迁移,数据分片不均匀,多字段分片索引的种种解决方案。

如果觉得有用请点击star谢谢大家了

QQ群:170029046

-

GITHUB github地址 github.com/xuejmnet/ea…

-

GITEE gitee地址 gitee.com/xuejm/easy-…